Neuromorphic Computing: When AI Hardware Mimics the Brain

2025-06-18

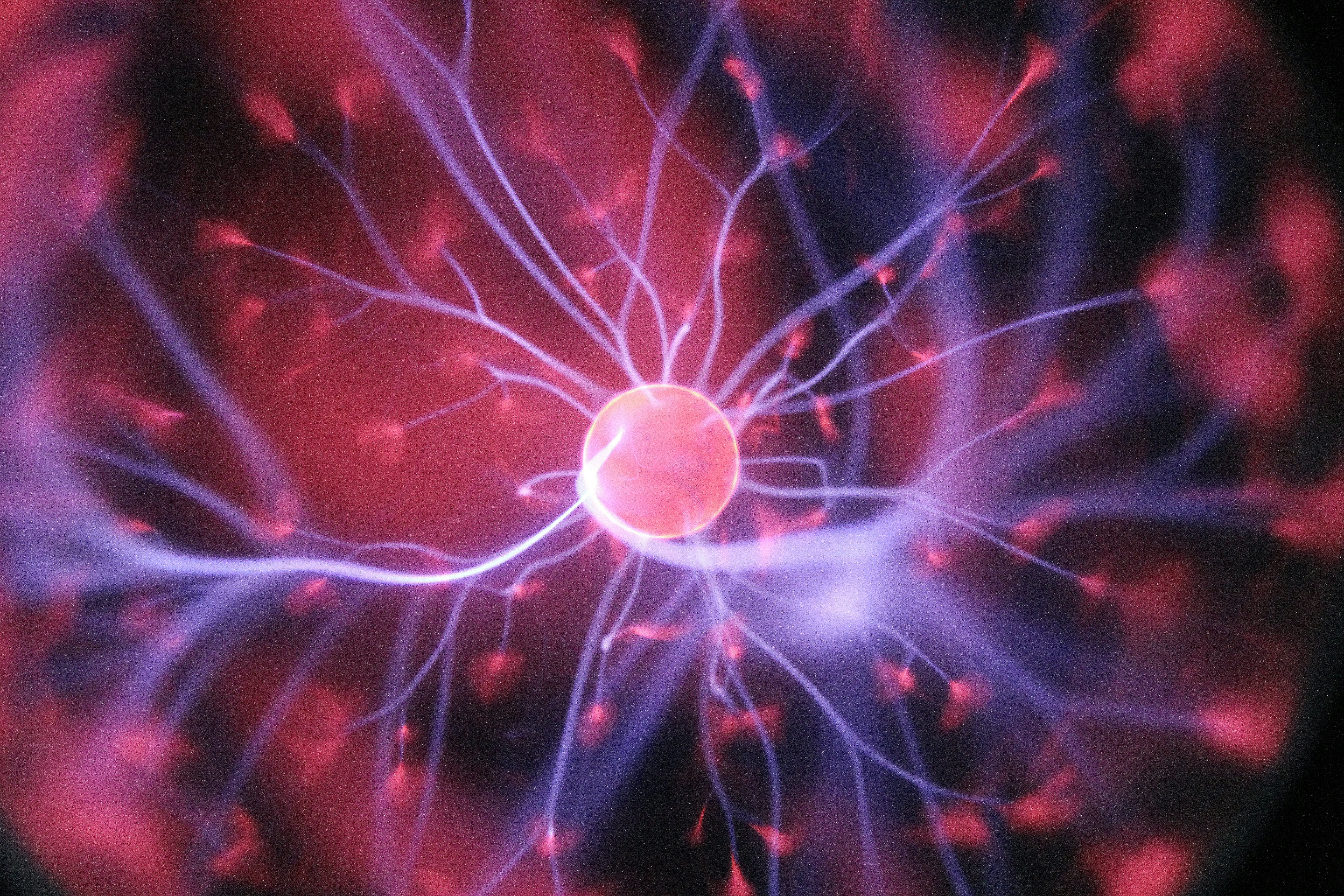

Traditional computing architectures have served us well for decades, but they're hitting fundamental limits when it comes to efficiently running AI workloads. Enter neuromorphic computing—a revolutionary approach that designs hardware to mimic the structure and function of the human brain. By blending neuroscience with computer engineering, neuromorphic systems promise dramatically more efficient AI that can learn, adapt, and operate more like biological intelligence. Let's explore how this brain-inspired technology is transforming the intersection of hardware and AI.

The Biological Inspiration

The human brain is arguably the most sophisticated computing system in existence—capable of incredible feats of pattern recognition, learning, and adaptation while consuming just 20 watts of power. Conventional computers, by contrast, require orders of magnitude more energy to perform similar tasks.

Neuromorphic computing draws inspiration from several key aspects of brain architecture:

-

Spiking neurons: Unlike traditional binary logic, biological neurons communicate through discrete electrical pulses or "spikes." This event-based processing is inherently sparse and energy-efficient.

-

Massive parallelism: The brain contains approximately 86 billion neurons with trillions of connections, all operating in parallel.

-

Co-located memory and processing: Unlike von Neumann architectures that separate memory and computation, the brain processes and stores information in the same physical structures.

-

Plasticity: Neural connections strengthen or weaken based on activity patterns, enabling learning without explicit programming.

Spiking Neural Networks: The Software Side

At the heart of neuromorphic computing are Spiking Neural Networks (SNNs), which model information transmission using discrete spikes rather than continuous values. This approach offers several advantages for certain applications:

# Simple example of a leaky integrate-and-fire neuron in Python

class LIFNeuron:

def __init__(self, threshold=1.0, leak_rate=0.1):

self.membrane_potential = 0.0

self.threshold = threshold

self.leak_rate = leak_rate

def forward(self, input_current):

# Integrate input

self.membrane_potential += input_current

# Check for spike

if self.membrane_potential >= self.threshold:

spike = 1

self.membrane_potential = 0 # Reset after spike

else:

spike = 0

# Apply leak

self.membrane_potential *= (1 - self.leak_rate)

return spikeThis simple model captures the essence of neuronal behavior: accumulating input until a threshold is reached, firing a spike, and then resetting. Unlike traditional deep learning where every neuron activates on every forward pass, spiking neurons are active only when necessary, leading to sparse, energy-efficient computation.

Libraries like BindsNET, Norse, and Nengo make it easier to build and train SNNs:

# Example using Norse library for SNNs

import torch

import norse.torch as norse

# Create a simple spiking network

layer = norse.LIFCell(2, 10)

spikes = torch.ones(5, 2) # Batch size 5, 2 input neurons

output, state = layer(spikes)Neuromorphic Hardware: Beyond Silicon

While software simulations of SNNs run on conventional hardware, true neuromorphic computing requires specialized chips designed from the ground up for brain-like processing. Several groundbreaking projects are leading the way:

Intel's Loihi

Intel's Loihi chip incorporates 130,000+ neurons and 130 million synapses per chip. What makes it special is its asynchronous, event-driven design that processes information only when neurons fire, dramatically reducing power consumption.

Loihi Architecture Highlights:

- 128 neuromorphic cores

- Each core simulates 1,024 neurons

- On-chip learning capabilities

- Mesh communication network

- ~1000x more energy-efficient than GPUs for certain workloadsIBM's TrueNorth

IBM's TrueNorth chip contains 1 million digital neurons and 256 million synapses. Despite this complexity, it consumes just 70 milliwatts—thousands of times more efficient than conventional processors for neural network tasks.

BrainChip's Akida

BrainChip's Akida is one of the first commercially available neuromorphic processors, designed specifically for edge AI applications where power efficiency is critical.

Programming Paradigms for Neuromorphic Systems

Developing for neuromorphic hardware requires rethinking traditional programming approaches. These systems don't follow the sequential, deterministic model of conventional computing.

Event-Based Programming

Neuromorphic systems are inherently event-driven, processing information only when something changes:

# Conceptual example of event-based programming for neuromorphic hardware

def on_event(event_data):

# Process incoming spike event

if event_data.neuron_id in attention_neurons:

# Trigger attention mechanism

focus_attention(event_data.coordinates)

# Route event to appropriate processing pathway

if event_data.source == "visual":

visual_processing_network.input_spike(event_data)Learning Rules

Instead of backpropagation, neuromorphic systems often use biologically-inspired learning rules:

# Simplified STDP (Spike-Timing-Dependent Plasticity) implementation

def update_synapse_weight(pre_spike_time, post_spike_time, current_weight):

time_diff = post_spike_time - pre_spike_time

if time_diff > 0: # Post-synaptic neuron fired after pre-synaptic

# Strengthen connection (Long-Term Potentiation)

weight_change = A_plus * math.exp(-time_diff / tau_plus)

else:

# Weaken connection (Long-Term Depression)

weight_change = -A_minus * math.exp(time_diff / tau_minus)

return current_weight + weight_changeReal-World Applications

Neuromorphic computing is finding applications across various domains:

Autonomous Systems

Self-driving cars and drones benefit from neuromorphic vision systems that can process visual information with ultra-low latency and power consumption:

# Conceptual code for neuromorphic vision in autonomous vehicles

class NeuromorphicVisionSystem:

def __init__(self, neuromorphic_processor):

self.processor = neuromorphic_processor

self.object_detectors = self.initialize_detectors()

def process_event_stream(self, dvs_events):

# Process events from Dynamic Vision Sensor

detected_objects = self.processor.process_spikes(dvs_events)

# Make real-time decisions

if any(obj.type == "pedestrian" and obj.distance < SAFE_DISTANCE

for obj in detected_objects):

return "BRAKE"Continuous Learning Systems

Unlike traditional AI models that are trained once and deployed, neuromorphic systems can learn continuously from their environment:

# Continuous learning with on-chip plasticity

class NeuromorphicAgent:

def __init__(self, loihi_interface):

self.processor = loihi_interface

self.enable_online_learning()

def enable_online_learning(self):

# Configure STDP learning rules

self.processor.configure_learning(

learning_rule="stdp",

lr_params={"A_plus": 0.1, "A_minus": 0.12}

)

def interact_with_environment(self, sensory_input):

# Process input and learn from the experience simultaneously

action = self.processor.process_and_learn(sensory_input)

return actionUltra-Low-Power Edge AI

Perhaps the most immediate impact of neuromorphic computing is enabling sophisticated AI in power-constrained environments:

Example Power Consumption Comparison:

- Traditional CNN on GPU: ~250W

- Same CNN on Neuromorphic Hardware: ~0.1W

- 2500x power reduction for equivalent taskChallenges and Future Directions

Despite its promise, neuromorphic computing faces several challenges:

-

Programming complexity: Developing for these novel architectures requires specialized knowledge spanning neuroscience and computer engineering.

-

Standardization: Unlike the mature ecosystem around traditional computing, neuromorphic platforms lack standardized tools and interfaces.

-

Scaling challenges: Building large-scale neuromorphic systems that approach the complexity of the human brain remains a significant challenge.

The future of neuromorphic computing likely involves hybrid systems that combine traditional computing with neuromorphic accelerators for specific tasks. Companies like Intel are already exploring this approach with their Pohoiki Springs system, which integrates multiple Loihi chips with conventional processors.

Conclusion

Neuromorphic computing represents a fundamental shift in how we design and build AI systems. By taking inspiration from the brain's architecture, these systems promise orders-of-magnitude improvements in energy efficiency while enabling new capabilities like continuous learning and ultra-low-latency processing.

As neuromorphic hardware becomes more accessible and programming tools mature, we can expect to see these brain-inspired systems powering everything from smarter edge devices to more capable robots and autonomous vehicles. The gap between artificial and biological intelligence may be narrowing, one spike at a time.

For developers looking to get started with neuromorphic computing, resources like Intel's Nengo framework, BrainChip's MetaTF, and the open-source SpikingJelly library provide accessible entry points into this exciting frontier where biology meets computation.